AI transparency: What is it and why do we need it?

As the use of AI models has evolved and expanded, the concept of transparency has grown in importance. Learn about the benefits and challenges of AI transparency.

Transparency in AI refers to the ability to peer into the workings of an AI model and understand how it reaches its decisions. There are many facets of AI transparency, including the set of tools and practices used to understand the model, the data it is trained on, the process of categorizing the types and frequency of errors and biases, and the ways of communicating these issues to developers and users.

The multiple facets of AI transparency have come to the forefront as machine learning models have evolved. A big concern is that more powerful or efficient models are harder -- if not impossible -- to understand since the inner workings are buried in a so-called black box.

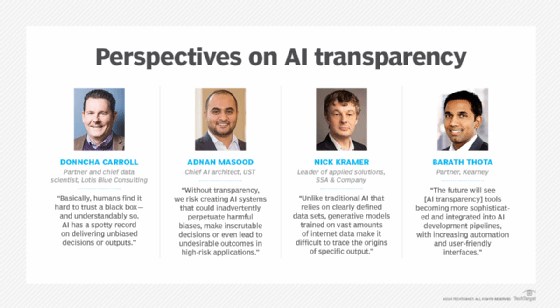

"Basically, humans find it hard to trust a black box -- and understandably so," said Donncha Carroll, partner and chief data scientist at business transformation advisory firm Lotis Blue Consulting. "AI has a spotty record on delivering unbiased decisions or outputs."

What is AI transparency?

Like any data-driven tool, AI algorithms depend on the quality of the data used to train the model. As a result, they can be subject to bias and carry some inherent risk. Transparency is therefore essential to securing the trust of users, influencers and those affected by the decision.

"AI transparency is about clearly explaining the reasoning behind the output, making the decision-making process accessible and comprehensible," said Adnan Masood, chief AI architect at UST, a digital transformation consultancy. "At the end of the day, it's about eliminating the black box mystery of AI and providing insight into the how and why of AI decision-making."

Trust, auditability, compliance and understanding potential biases are some of the fundamental reasons why transparency is becoming a requirement in the field of AI. "Without transparency, we risk creating AI systems that could inadvertently perpetuate harmful biases, make inscrutable decisions or even lead to undesirable outcomes in high-risk applications," Masood said.

Working with explainability and interpretability

AI transparency works hand in hand with related concepts like AI explainability and interpretability, but they are not the same.

AI transparency helps ensure that all stakeholders can clearly understand the workings of an AI system, including how it makes decisions and processes data. "Having this clarity is what builds trust in AI, particularly in high-risk applications," Masood said.

By contrast, explainability focuses on providing understandable reasons for the decisions made by an AI system. Interpretability refers to how well a person can predict a model's outputs from its inputs. So, while explainability and interpretability are crucial to achieving AI transparency, they don't fully encompass it on their own.
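For simple models, explainability can be exact. The sketch below assumes a hypothetical linear scoring model with made-up weights and pre-normalized features (`WEIGHTS`, `BIAS` and `explain` are illustrative names, not from any library). Because the score is a weighted sum, each weight-times-value term is that feature's contribution, and ranking the terms yields a human-readable list of reasons.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * feature value).
# Weights are illustrative placeholders; features are assumed to be pre-normalized.
WEIGHTS = {"income": 0.4, "debt_ratio": -0.8, "years_employed": 0.2}
BIAS = -0.1


def explain(applicant: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the score plus each feature's contribution, largest magnitude first."""
    contributions = {name: weight * applicant[name] for name, weight in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


applicant = {"income": 1.5, "debt_ratio": 0.9, "years_employed": 2.0}
score, reasons = explain(applicant)
print(f"score = {score:.2f}")  # score = 0.18
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")  # debt_ratio: -0.72, then income, then years_employed
```

Complex models have no such exact decomposition, which is why tools such as SHAP and LIME approximate per-feature contributions instead.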

AI transparency also involves being open about data handling, the model's limitations, potential biases and the context of its usage.

Ilana Golbin Blumenfeld, responsible AI lead at PwC, noted that process transparency and data and system transparency can complement interpretability and explainability.

Process transparency entails documenting and logging significant decisions made throughout the development and implementation of a system, including the governance structure and testing practices.

Data and system transparency entails communicating to users or relevant parties that an AI or automated system will use their data. It also involves alerting users when they are interacting directly with an AI system, such as a chatbot.

Why is AI transparency needed?

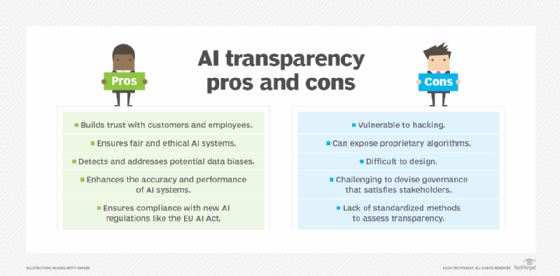

AI transparency, as noted, is vital to fostering trust between AI systems and users. Manojkumar Parmar, CEO and CTO at AIShield, an AI security platform, said some of the top benefits of AI transparency include the following:

- Builds trust with customers and employees.

- Ensures fair and ethical AI systems.

- Detects and addresses potential data biases.

- Enhances the accuracy and performance of AI systems.

- Ensures compliance with new AI regulations like the EU AI Act.

Tradeoffs of black box AI

AI performance is typically measured as a percentage of accuracy, that is, how often the system gives correct answers. The minimum acceptable accuracy varies with the task, but accuracy, even at 99%, cannot be the only measure of AI's value. Organizations must also account for a major shortcoming of AI, especially in business applications: even a model with near-perfect accuracy can be problematic. Highly accurate models are often more complex, and as complexity increases, the ability to explain why the model arrived at a certain answer tends to decrease. This raises an issue companies must confront: the model's lack of transparency and, therefore, our limited human capacity to trust its results.

The black box problem was acceptable to some degree in the early days of AI technology, but it became much harder to accept once algorithmic bias was spotted. For example, AI that was developed to sort resumes disqualified people for certain jobs based on their race, and AI used in banking disqualified loan applicants based on their gender. The data these systems were trained on did not adequately represent all groups of people, and the historical bias embedded in past human decisions was passed on to the models.
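One way to surface this kind of bias is to audit a model's decisions by group. The sketch below, using hypothetical data and function names, computes each group's approval rate and the ratio of the lowest rate to the highest. It flags ratios below 0.8, the "four-fifths" rule of thumb from U.S. employment-selection guidelines; a low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Hypothetical audit sample of loan decisions for two groups, A and B
decisions = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 30 + [("B", False)] * 70
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)           # {'A': 0.6, 'B': 0.3}
print(f"{ratio:.2f}")  # 0.50
if ratio < 0.8:
    print("Below the four-fifths threshold: review the model and its training data")
```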

A near-perfect AI model can still make alarming mistakes, such as classifying a stop sign as a speed limit sign.

While these are some of the more extreme cases of misclassification, and some involve adversarial inputs purposely designed to fool the AI model, they still underline the fact that the algorithm has no real understanding of what it is doing. AI follows patterns to arrive at an answer, and the magic is that it does so exceptionally well, beyond human capability. For the same reason, unusual alterations in the pattern make the model vulnerable, and that's why AI transparency is needed: to know how AI reaches a conclusion.

When using AI for critical decisions, understanding the algorithm's reasoning is imperative. An AI model designed to detect cancer, even if it is only 1% wrong, could threaten a life. In cases like these, AI and humans need to work together, and the task becomes much easier when the AI model can explain how it reached a certain decision. Transparency in AI makes it a team player.

Sometimes, transparency is necessary from a legal perspective.

"Some of the regulated industries, like banks, have model explainability as a necessary step to get compliance and legal approval before the model can go into production," said Piyanka Jain, president and CEO at data science consultancy Aryng.

Other cases involve the EU's General Data Protection Regulation (GDPR) or the California Consumer Privacy Act, where AI deals with private information. "An aspect of GDPR is that, when an algorithm using an individual's private data makes a decision, the human has the right to ask for the reasons behind that decision," said Carolina Bessega, innovation lead, Office of the CTO at Extreme Networks.

Weaknesses of AI transparency

If AI transparency has so many benefits, why aren't all algorithms transparent? Because transparency itself has the following fundamental weaknesses:

- Vulnerable to hacking. Transparent AI models are more susceptible to hacks, as threat actors have more information on their inner workings and can locate vulnerabilities. To mitigate these challenges, developers must build their AI models with security top of mind and test their systems.

- Can expose proprietary algorithms. Another concern with AI transparency is protection of proprietary algorithms, as researchers have demonstrated that entire algorithms can be stolen simply by looking at their explanations.

- Difficult to design. Lastly, transparent algorithms are harder to design, in particular for complex models with millions of parameters. In cases where transparency in AI is a must, it might be necessary to use less sophisticated algorithms.

- Governance challenges. Another fundamental weakness is assuming that any off-the-shelf transparency method will satisfy all governance needs, Blumenfeld cautioned. "We need to consider what we require for our systems to be trusted, in context, and then design systems with transparency mechanisms to do so," she explained. For example, it's tempting to focus on technical methods of transparency to enforce interpretability and explainability. But systems built this way might still rely on users to identify biased and inaccurate information. If an AI chatbot cites a source, it's still up to the human to determine whether the source is valid. This takes time and energy and leaves room for error.

- Lack of standardized methods to assess transparency. Another issue is that not all transparency methods are reliable: some may generate different results each time they are run. This lack of reliability and repeatability can reduce trust in the system and hinder transparency efforts.
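Permutation importance, a common model-agnostic explanation technique used here for illustration, shows where such variability can come from. It shuffles one feature's values and measures how much accuracy drops; because the shuffle is random, two unseeded runs can report different importances. The toy model, data and function names below are hypothetical, and this hand-rolled function is not scikit-learn's `sklearn.inspection.permutation_importance`, which exposes similar controls through its `random_state` and `n_repeats` parameters. Seeding the shuffle and recording the seed makes a result reproducible.

```python
import random


def model(row: list[float]) -> int:
    """Hypothetical toy classifier: predicts 1 when feature 0 exceeds 0.5 and ignores feature 1."""
    return int(row[0] > 0.5)


def accuracy(rows: list[list[float]], labels: list[int]) -> float:
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows: list[list[float]], labels: list[int], feature: int, seed: int) -> float:
    """Accuracy drop after shuffling one feature column with a seeded random generator."""
    rng = random.Random(seed)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return accuracy(rows, labels) - accuracy(shuffled, labels)


data_rng = random.Random(0)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [model(r) for r in rows]  # labels match the model, so baseline accuracy is 1.0

run_a = permutation_importance(rows, labels, feature=0, seed=42)
run_b = permutation_importance(rows, labels, feature=0, seed=42)
run_c = permutation_importance(rows, labels, feature=0, seed=7)
print(run_a == run_b)  # True: same seed, same shuffle, same result
print(run_a, run_c)    # different seeds may report different importances
```

Averaging over several seeds and reporting the spread is one way to show how stable an explanation actually is.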

As AI models continuously learn and adapt to new data, they must be monitored and evaluated to maintain transparency and ensure that AI systems remain trustworthy and aligned with intended outcomes.
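Part of this monitoring can be automated. The sketch below swaps in the population stability index (PSI), one common drift metric, to compare the distribution of a model's live scores with the distribution seen at validation time. The histograms are hypothetical, and the 0.1 and 0.25 cutoffs are industry rules of thumb rather than standards.

```python
import math


def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population stability index: sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor avoids log(0) and division by zero for empty bins
        a_pct = max(a / a_total, eps)
        total += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return total


def drift_level(value: float) -> str:
    """Map a PSI value to a rule-of-thumb label (cutoffs are conventions, not standards)."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate shift"
    return "significant shift"


baseline = [250, 250, 250, 250]   # hypothetical score histogram at validation time
this_week = [400, 300, 200, 100]  # hypothetical histogram of live scores
value = psi(baseline, this_week)
print(f"PSI = {value:.3f} ({drift_level(value)})")  # PSI = 0.228 (moderate shift)
```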

How to reach a balance

As with any other computer program, AI needs optimization. To do that, we look at the specific needs of a problem and then tune our general model to fit those needs best.

When implementing AI, an organization must pay attention to the following four factors:

- Legal needs. If the work requires explainability from a legal and regulatory perspective, there may be no choice but to provide transparency. To achieve this, an organization might have to resort to simpler but explainable algorithms.

- Severity. If the AI is used in life-critical missions, transparency is a must. Such tasks are unlikely to depend on AI alone, so having a reasoning mechanism improves teamwork with human operators. The same applies if AI affects someone's life, such as algorithms used to screen job applications. On the other hand, if the AI task is not critical, an opaque model would suffice. Consider an algorithm that recommends the next prospect to contact from a database of thousands of leads: cross-checking the AI's decision would simply not be worth the time.

- Exposure. Depending on who has access to the AI model, an organization might want to protect the algorithm from unwanted access. Explainability can be valuable even in cybersecurity if it helps experts reach better conclusions. But if outsiders can access the same information and learn how the algorithm works, it might be better to go with opaque models.

- Data set. No matter the circumstances, an organization must always strive to have a diverse and balanced data set, preferably from as many sources as possible. AI is only as smart as the data it is trained on. By cleaning the training data, removing noise and balancing the inputs, we can help to reduce bias and improve the model's accuracy.
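Balancing the inputs can be done by collecting more data, by resampling or by reweighting. The sketch below shows reweighting, one option among several: each example gets a weight inversely proportional to its group's frequency, using the same `n / (k * count)` formula as scikit-learn's `class_weight="balanced"` heuristic, so every group contributes equal total weight during training. The group labels are hypothetical.

```python
from collections import Counter


def balancing_weights(groups: list[str]) -> list[float]:
    """Weight each example by n / (k * count(group)) so every group has equal total weight."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


groups = ["A"] * 8 + ["B"] * 2  # hypothetical, imbalanced training sample
weights = balancing_weights(groups)
print(weights[0], weights[-1])  # 0.625 2.5
```

Here both groups end up with a total weight of 5.0. Many scikit-learn estimators accept per-example weights like these through the `sample_weight` argument of `fit`.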

Best practices for implementing AI transparency

Implementing AI transparency -- including finding a balance between competing organizational aims -- requires ongoing collaboration and learning between leaders and employees. It calls for a clear understanding of the requirements of a system from a business, user and technical point of view. Blumenfeld said that by providing training to boost AI literacy, organizations can prepare employees to actively contribute to identifying flawed responses or behaviors in AI systems.

The tendency in AI development is to focus on features, utility and novelty, rather than safety, reliability, robustness and potential harm, Masood said. He recommends prioritizing transparency from the inception of the AI project. It's helpful to create datasheets for data sets and model cards for models, implement rigorous auditing mechanisms and continuously study the potential harm of models.
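A model card can start as a small structured record that travels with the model. The sketch below uses a Python dataclass whose fields loosely follow sections commonly found in model cards (intended use, training data, evaluation, limitations); the model name, field names and metric values are hypothetical placeholders, not real results.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, illustrative model card; field names are assumptions, not a formal schema."""
    model_name: str
    version: str
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    training_data: str = ""  # e.g., a pointer to the data set's datasheet
    evaluation_metrics: dict[str, float] = field(default_factory=dict)
    known_limitations: list[str] = field(default_factory=list)


card = ModelCard(
    model_name="resume-screener",  # hypothetical model
    version="0.1.0",
    intended_use="Rank applications for human review; not for automatic rejection.",
    out_of_scope_uses=["Final hiring decisions without human review"],
    training_data="See datasheet for the internal applications data set (hypothetical)",
    evaluation_metrics={"accuracy": 0.91, "selection_rate_ratio": 0.86},  # placeholder values
    known_limitations=["Under-represents applicants from non-traditional career paths"],
)
print(json.dumps(asdict(card), indent=2))
```

Storing the card as JSON next to the model artifact lets it be versioned with the model and reviewed during audits.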

Key use cases for AI transparency

AI transparency has many facets, so teams must identify and examine each of the potential issues standing in the way of transparency. Parmar finds it helpful to consider the following use cases:

- Data transparency. This encompasses understanding the data feeding AI systems, a crucial step in identifying potential biases.

- Development transparency. This involves illuminating the conditions and processes in AI model creation.

- Model transparency. This reveals how AI systems function, possibly by explaining decision-making processes or by making the algorithms open source.

- Security transparency. This assesses the AI system's security during development and deployment.

- Impact transparency. This evaluates the effects of AI systems by tracking system usage and monitoring results.

The future of AI transparency

AI transparency is a work in progress as the industry discovers new problems and better processes to mitigate them.

"As artificial intelligence adoption and innovation continues its surge, we'll see greater AI transparency, especially in the enterprise," Blumenfeld predicted. But the approaches to AI transparency will vary depending on the needs of a given industry or organization.

Carroll predicts that insurance premiums in domains where AI risk is material could also shape the adoption of AI transparency efforts. These will be based on an organization's overall system risk and evidence that best practices have been applied in model deployment.

Masood believes regulatory frameworks will likely play a vital role in the adoption of AI transparency. For example, the EU AI Act highlights transparency as a critical aspect, which is indicative of the shift toward more transparency in AI systems to build trust, facilitate accountability and ensure responsible deployment.

"For professionals like myself, and the overall industry in general, the journey toward full AI transparency is a challenging one, with its share of obstacles and complications," Masood said. "However, through the collective efforts of practitioners, researchers, policymakers and society at large, I'm optimistic that we can overcome these challenges and build AI systems that are not just powerful, but also responsible, accountable and, most importantly, trustworthy."

This article includes reporting and writing by David Petersson.