Trying to wrap your brain around AI? CMU has an AI stack for that

In this episode of 'Schooled in AI,' Andrew Moore, dean of the School of Computer Science at Carnegie Mellon University, talks about the benefits of the AI stack.

Hey, I'm Nicole Laskowski and this is Schooled in AI.

I introduced this podcast series a little while back. And just to refresh your memories, the series features Carnegie Mellon University (CMU) professors. We'll be talking about their AI research and how it might affect the enterprise. My aim is to give CIOs a window into what's coming next in AI -- and to get us talking about it.

Last time, my guest was Manuela Veloso. She's a professor in the School of Computer Science at CMU. We talked about how the overwhelming interest in AI and the enterprise thirst for talent are having a real impact on the school itself.

This time, I'm talking to Andrew Moore.

Andrew Moore: I'm basically your desk jockey administrator. I'm responsible for the School of Computer Science at Carnegie Mellon, which is absolutely the center of computer science on the planet right now.

Moore is the dean of the School of Computer Science. He started in this post back in 2014, and he came to it with a pretty impressive background. Before becoming dean, he was a vice president of engineering at Google, where he helped grow the Google AdWords and Shopping systems. He has also been a professor at CMU, co-founded a data mining company and did his postdoc at the Massachusetts Institute of Technology. And he's an AI researcher whose work has been used by the likes of Pfizer, Homeland Security, the Defense Advanced Research Projects Agency, Mars and Unilever, according to his website. When I asked him about a major achievement in his career, he pointed to software he helped develop to detect things like near-Earth asteroids. That software is still in use today.

Moore: It always makes me feel really good to know that our software is running 24/7, watching the sky to detect bad stuff.

Editor's note: A full transcript of the interview, which has been edited for clarity, is below.

I wanted to talk to Moore about some of the AI basics, like how the School of Computer Science defines artificial intelligence. That may seem simplistic, but the term is used so broadly that I think it's worth taking the time to make sure we all know what we're talking about when we talk about AI. So our conversation started with a definition and then moved to CMU's AI stack, which I'll explain in a minute and which could help CIOs wrap their heads around this sprawling term. Here's Moore on how CMU defines AI.

Moore: We've tried to make it pretty focused. You're building an artificial intelligence system if you're building a system which does two things: It must understand the world and it must make smart decisions based on what it's understanding. So it's: understand plus smart decisions.

The process of understanding has two pieces. First, an AI system collects information through sensor technology to, say, hear or see a scene. Second, the system compares the collected data against what it has learned in the past and uses that experience to make sense of what is happening right now.

Moore: And then for it to be a useful AI system, it's not enough for it to just passively observe; it's got to either make decisions or help you, the human, make decisions.
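To make Moore's "understand plus smart decisions" definition concrete, here is a minimal Python sketch of the two steps. Everything in it is hypothetical: the two-number sensor readings, the labeled past experiences and the action table are invented for illustration, and a one-nearest-neighbor lookup stands in for whatever learned model a real AI system would use to make sense of what it senses.

```python
import math

# Hypothetical "past experience": sensor feature vectors paired with what they turned out to mean.
EXPERIENCE = [
    ((0.9, 0.1), "pedestrian"),
    ((0.2, 0.8), "parked_car"),
    ((0.1, 0.1), "clear_road"),
]

# Hypothetical decision table mapping an understood situation to an action.
ACTIONS = {
    "pedestrian": "brake",
    "parked_car": "slow_down",
    "clear_road": "maintain_speed",
}


def understand(reading):
    """Interpret a raw reading by matching it to the closest past experience (1-nearest neighbor)."""
    _, label = min(EXPERIENCE, key=lambda item: math.dist(item[0], reading))
    return label


def decide(situation):
    """Turn the system's understanding of the situation into an action."""
    return ACTIONS[situation]


def step(reading):
    """One pass of the loop: understand first, then make a decision based on that understanding."""
    situation = understand(reading)
    return situation, decide(situation)


print(step((0.85, 0.2)))  # ('pedestrian', 'brake')
```

The split mirrors the definition: `understand` only interprets and `decide` only acts on that interpretation, so either half could be replaced with something more sophisticated without touching the other.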

Take self-driving cars as an example. Moore said that half the engineering work that goes into building autonomous vehicles is getting the car to understand its environment. That includes detecting static elements like lane markings. But it also means getting a grip on moving elements like other cars on the road, pedestrians and other potential dangers, whose behavior the car can predict only if it has enough knowledge (we're talking millions of hours of previous experience).

Moore: That's where the machine learning came in. That's how it understands its state. And then based on this understanding of what's possibly going to happen, it's got to make the decision as to what it's going to do -- speed up, slow down, put on the brakes, change sensor modalities.

The AI stack

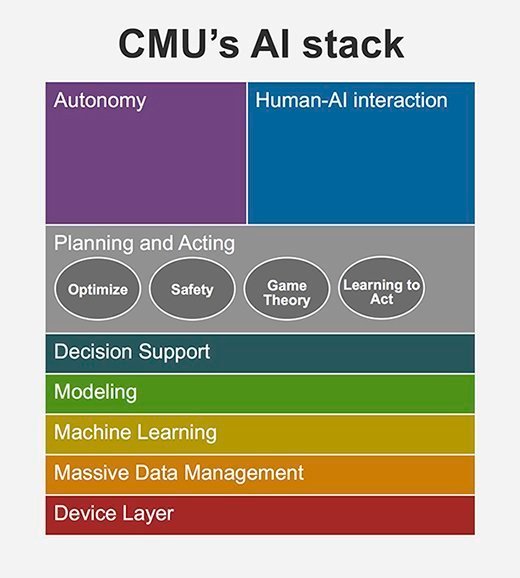

The School of Computer Science has also created an AI stack, which is essentially a blueprint. It helps visualize and organize how all of the technologies that sit under the AI umbrella fit together. Moore said that's extremely valuable to the school, and he explained why with a simple analogy.

Moore: One way I always like to think about it is a large building with many floors, and on each floor, people are working on a problem and they're using results from the floors below them, and then they're passing up their work products to the floors above them to build for other things.

What it helps us do is, as we are hiring new faculty or helping fit grad students into an area that they're excited about, it really helps to have this notion of people at different levels helping each other. [For example,] if you happen to be doing research on great machine learning algorithms, you've immediately got people who can bring it into important end-use cases. … [You also have] people you're working with who can give you the computational support and the data-sharding support and the latency support that you need in order to make this stuff work.

This AI stack could also be an excellent resource for CIOs. You have to converse with AI vendors, you have to make sense of the AI vendor landscape and you have to explain to your business colleagues and your CEO what this thing called artificial intelligence means.

So next, let's dissect the School of Computer Science's AI stack. It's made up of seven layers.

At the top is the AI application layer. These applications range from internet question-answering systems to robotics to safety control systems. Moore said AI applications are the biggest contributor to today's confusion around AI because they fall into two distinct categories, a distinction that people rarely make when they talk about them.

Moore: The first bucket we would call autonomous systems. This is where the decision-making part of the AI is really having to make decisions for itself. Why would it do that? You do it if you're in a situation where you've got, say, a robot who's exploring caves in Afghanistan or a satellite that has to make decisions in less than a quarter of a second before it gets damaged by a solar flare -- these are the things where it just isn't possible for a human expert to be involved.

Then the other form of these applications is what we call cognitive assistance AI applications. And there, the work that's going on in the AI is to come up with advice for the user. This is actually the area that's much closer to the consumer-product end of all of this. Your company, your organization is adding value if it's able to give better advice, and if it's able to give it in a way that the people getting the advice feel very comfortable with: They can see justifications for it, and it's not alienating to them.

These applications essentially consume all of the layers below them in the stack. The next layer down is decision-making systems, usually called the optimizer, planner or ERP system layer.

Moore: These are the places where, based on stuff coming from below, the system is actually making that decision: Where should I be sending my 5,000 Uber drivers in the city based on what I'm currently understanding about where they're likely to be needing pickups? Where should I be deploying my emergency workers based on what I understand of who's trapped in what building?
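Moore's Uber example can be sketched as a tiny allocation problem. The Python below is a hypothetical illustration, not how Uber or any real dispatcher works. It assumes the layers below have already produced a forecast of pickups per zone (the zone names and numbers are made up) and simply splits the drivers in proportion to that forecast, using largest-remainder rounding so the counts add up exactly.

```python
def allocate_drivers(total_drivers, predicted_demand):
    """Split drivers across zones in proportion to predicted pickups.

    Uses integer largest-remainder rounding so the allocations always sum to total_drivers.
    """
    total_demand = sum(predicted_demand.values())
    if total_demand <= 0:
        raise ValueError("need positive predicted demand in at least one zone")

    allocation, remainders = {}, {}
    for zone, demand in predicted_demand.items():
        # Whole drivers this zone is owed, plus the leftover fraction (kept as an integer numerator).
        allocation[zone], remainders[zone] = divmod(total_drivers * demand, total_demand)

    # Give any drivers left over after rounding down to the zones with the largest remainders.
    leftover = total_drivers - sum(allocation.values())
    for zone in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        allocation[zone] += 1
    return allocation


# Hypothetical forecast coming up from the machine learning layer.
demand = {"downtown": 1200, "airport": 700, "stadium": 100}
print(allocate_drivers(5000, demand))
```

A production system would more likely hand this to an optimizer that also weighs travel times and where each driver currently is; the proportional rule just shows the shape of the decision: a forecast goes in, and a concrete deployment comes out.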

Next is a layer of technology Moore described as big linear programs, big dynamic programs or reinforcement learning. Those are names for the systems that enable AI bots to decide autonomously on the best outcome.
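As an illustration of the dynamic-programming flavor of this layer, the sketch below runs value iteration, a standard dynamic-programming method, on a made-up three-state driving problem. The states, actions, rewards and discount factor are all hypothetical. The point is only to show how such a system works out the best action for each situation on its own, without a human spelling it out.

```python
GAMMA = 0.9  # hypothetical discount factor: how much future rewards count

# Hypothetical deterministic model: (state, action) -> (next_state, reward).
MODEL = {
    ("cruising", "maintain"): ("close_to_car_ahead", 1.0),   # progress, but the gap shrinks
    ("cruising", "brake"): ("cruising", 0.0),
    ("close_to_car_ahead", "maintain"): ("collision", -100.0),
    ("close_to_car_ahead", "brake"): ("cruising", 0.0),
}
TERMINAL = {"collision"}
STATES = ["cruising", "close_to_car_ahead", "collision"]
ACTIONS = ["maintain", "brake"]


def q_value(values, state, action):
    """Immediate reward plus the discounted value of the state the action leads to."""
    next_state, reward = MODEL[(state, action)]
    return reward + GAMMA * values[next_state]


def value_iteration(tol=1e-9):
    """Repeatedly apply the Bellman update until the state values stop changing."""
    values = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            if s in TERMINAL:
                continue  # terminal states keep a value of 0
            best = max(q_value(values, s, a) for a in ACTIONS)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # The policy picks, in each state, the action with the highest value.
    policy = {s: max(ACTIONS, key=lambda a: q_value(values, s, a)) for s in STATES if s not in TERMINAL}
    return values, policy


values, policy = value_iteration()
print(policy)
```

With these numbers, the computed policy keeps its speed while cruising but brakes when it gets close to the car ahead, because the large penalty for a collision outweighs the small reward for making progress.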

Moore: Then we work our way further down the stack to the big machine learning systems, which help understand, based on the raw things that I'm sensing (based on the raw pixels from the camera or based on the raw spectral audio information that's coming in), what those sensor readings mean.
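For a sense of what learning the meaning of raw sensor data looks like at the smallest possible scale, here is a perceptron, one of the simplest machine learning algorithms, trained directly on raw pixel values. The four-pixel "images" and their labels are invented. Real systems at this layer are vastly larger, but the basic idea of adjusting weights until raw inputs map to the right meaning is the same.

```python
# Hypothetical training data: four raw pixel brightness values (top-left, top-right,
# bottom-left, bottom-right) labeled +1 for "bright top" and -1 for "bright bottom".
TRAINING = [
    ([1.0, 1.0, 0.0, 0.0], 1),
    ([0.0, 0.0, 1.0, 1.0], -1),
    ([0.9, 0.8, 0.1, 0.0], 1),
    ([0.1, 0.0, 0.9, 0.8], -1),
    ([1.0, 0.7, 0.2, 0.1], 1),
    ([0.2, 0.1, 1.0, 0.7], -1),
]


def score(weights, bias, pixels):
    """Weighted sum of the raw pixels plus a bias term."""
    return sum(w * x for w, x in zip(weights, pixels)) + bias


def train_perceptron(data, max_epochs=100):
    """Classic perceptron rule: nudge the weights toward every misclassified example."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for pixels, label in data:
            if label * score(weights, bias, pixels) <= 0:  # wrong side of (or on) the boundary
                weights = [w + label * x for w, x in zip(weights, pixels)]
                bias += label
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


def predict(weights, bias, pixels):
    return "bright top" if score(weights, bias, pixels) > 0 else "bright bottom"


weights, bias = train_perceptron(TRAINING)
print(predict(weights, bias, [0.8, 0.9, 0.0, 0.2]))  # bright top
```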

Under that are the data support systems that manage data and make it accessible for use with the lowest latency possible. Moore pointed to this layer and said some of the most important research in artificial intelligence is happening in this space.

Moore: One of the most important bits of AI research for practical use in my mind at the moment is work by one of our Carnegie Mellon faculty. He goes by the name Satya [M. Satyanarayanan] and his entire work is on making it so that these huge powerful optimizations that you have to do in real time [are done] on devices which are within 100 feet of your user. In other words, he's trying to bring cloud computing close to the users because for many use cases, that half-second latency of going back to a real cloud provider is just too slow to be safe.
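Moore's latency point can be checked with simple arithmetic: a moving vehicle keeps moving while it waits for an answer. The sketch below assumes a hypothetical speed of 25 meters per second (about 56 mph). It takes the half-second cloud round trip from Moore's example and compares it with an assumed 20-millisecond round trip to a nearby edge server. The edge figure is illustrative, not a measurement of Satya's systems.

```python
def distance_during_latency(speed_m_per_s, latency_s):
    """Meters a vehicle travels while waiting latency_s seconds for a response."""
    return speed_m_per_s * latency_s


SPEED_M_PER_S = 25.0  # hypothetical vehicle speed, roughly 56 mph

# The 0.5 s figure comes from Moore's example; the 0.02 s edge figure is an assumption.
for label, latency_s in [("distant cloud", 0.5), ("nearby edge server", 0.02)]:
    meters = distance_during_latency(SPEED_M_PER_S, latency_s)
    print(f"{label}: {meters:.1f} m traveled before the answer arrives")
```

At that speed, the half-second round trip means the car covers roughly 12 meters "blind," which is why moving the computation within 100 feet of the user matters for safety-critical uses.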

At the very bottom of the stack, and the site of many recent advances in AI, is the layer of technology that underpins everything else: hardware like GPUs and flash memory. Moore said new technologies like quantum computing will likely be added to this layer in the next few years.
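To tie the layers together, the sketch below models Moore's building analogy: each floor takes the work product of the floor below and hands its own result up. It's a deliberately toy Python illustration, not CMU's architecture. It covers only the layers described in this article, and every function body is a hypothetical stand-in: a fixed list of pixel values for the hardware, a one-line rule for the machine learning model and a hard-coded cost table for the optimizer.

```python
from typing import Callable

# A layer receives the work product of the layer below and returns its own.
Layer = Callable[[dict], dict]


def hardware(_: dict) -> dict:
    # Hypothetical raw readings from a camera sensor.
    return {"raw_pixels": [0.9, 0.8, 0.1, 0.0]}


def data_support(below: dict) -> dict:
    # Stand-in for storage and low-latency access: package the raw data for higher layers.
    return {"frame": list(below["raw_pixels"])}


def machine_learning(below: dict) -> dict:
    # Toy rule standing in for a trained model: a bright top half means an obstacle ahead.
    frame = below["frame"]
    return {"obstacle_ahead": sum(frame[:2]) > sum(frame[2:])}


def optimization(below: dict) -> dict:
    # Hard-coded costs standing in for a linear program, dynamic program or RL policy.
    if below["obstacle_ahead"]:
        costs = {"maintain_speed": 100.0, "slow_down": 10.0, "brake": 1.0}
    else:
        costs = {"maintain_speed": 0.0, "slow_down": 5.0, "brake": 20.0}
    return {"costs": costs}


def decision(below: dict) -> dict:
    # Choose the lowest-cost action.
    costs = below["costs"]
    return {"action": min(costs, key=costs.get)}


def application(below: dict) -> dict:
    # An autonomous application acts on the decision; a cognitive assistant would present it as advice.
    return {"command": below["action"], "mode": "autonomous"}


STACK: list[Layer] = [hardware, data_support, machine_learning, optimization, decision, application]


def run_stack(layers: list[Layer]) -> dict:
    work_product: dict = {}
    for layer in layers:  # bottom floor first, each result passed up to the next floor
        work_product = layer(work_product)
    return work_product


print(run_stack(STACK))
```

Because every layer only depends on the dictionary handed up from below, any one of them could be swapped for a real component, which is the kind of division of labor Moore describes among the school's researchers.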

Advice for CIOs

Beyond the basics -- beyond definitions and AI stacks -- Moore had some advice on where CIOs should begin with AI technology: Start with AI dialogue systems like Apple's Siri, Google Home or Amazon Alexa.

Moore: Now, the reason I suggest this is if you managed in-house to have some of your folks working on integrating with these sorts of AI dialogue systems, which the rest of the world is working so hard on, you'll be ready. Over the next half decade or so, you'll see much more integration of AI systems, which can do this understanding of the world and then act on it. And by playing now and making sure that you've got team members who are very comfortable developing and giving new skills to something like Alexa, you're ready for what happens next.

Moore also talked about AI technologies he thinks CIOs should keep an eye on -- human activity detection and human emotional state monitoring. He said use cases around these technologies have had an impact on AI research at CMU in the last couple of years.

Moore: There are a number of very nice open source stacks, as well as a bunch of proprietary companies offering services, for using computer vision to watch all of the people walking around a public area. Or, if you have a user who's interacting with your system, perhaps over a video conference chat or in a medical application, there is now much more power for tracking the movements of all their facial muscles and noticing and understanding common tells for emotional state issues.

One use case is an airport that wants to understand the general mood of customers in a concourse after making changes to foot-traffic flow. Companies have typically relied on things like surveys to gather this kind of data, but Moore said these systems are starting to provide real metrics. Another is in the medical field, where AI systems can gauge changes in a patient's psychological state by monitoring their conversational responses.

Moore: These are turning out to be so sensitive that, in some cases … the algorithms have been able to detect whether a treatment for depression is working before the patients themselves or their physicians are aware.

It's all very exciting, Moore said, but also terrifying. On his website, Moore said his No. 1 ambition in life is to be able to say, "Let me through. I'm a computer scientist!" The time for such phrases may be fast approaching.