With machine learning models, explainability is difficult and elusive

The enterprise's demand for explainable AI is merited, say experts, but the problem is more complicated than most of us understand and possibly unsolvable.

NEW YORK CITY -- The push by enterprises for explainable artificial intelligence is shining a light on one of the problematic aspects of machine learning models. That is, if the models operate as so-called black boxes, they don't give a business visibility into why they arrive at the recommendations they make.

But, according to experts, the enterprise demand for explainable artificial intelligence overlooks a number of characteristics of current AI applications, including the fact that not all machine learning models require the same level of interpretability.

"The importance of interpretability really depends on the downstream application," said Zoubin Ghahramani, professor of information engineering at the University of Cambridge and chief scientist at Uber Technologies Inc., during a press conference at the recent Artificial Intelligence Conference hosted by O'Reilly Media and Intel AI. A machine learning model that automatically captions an image would not need to be held to the same standards as machine learning models that determine how loans should be distributed, he contended.

Plus, companies pursuing interpretability may buy into a false impression that achieving such a feat automatically equates to trustworthiness -- when it doesn't.

"If we focus only on interpretability, we're missing ... the 15 other risks that [need to be addressed to achieve] real trustworthy AI," said Kathryn Hume, vice president of product and strategy at Integrate.ai, an enterprise AI software startup. And we mistakenly believe that cracking open the black box is the only way to peer inside when there are other methods, such as an outcomes-oriented approach that looks at specific distributions and outcomes, that could also provide "meaningful transparency," she said.

The fact that companies tend to oversimplify the problem of achieving trustworthy artificial intelligence, however, doesn't negate the need to make machine learning models easier to interpret, Hume and the other experts gathered at the press conference stressed.

"I think there are enough problems where we absolutely need to build transparency into the AI systems," said Tolga Kurtoglu, CEO at Xerox's PARC.

Indeed, Ghahramani pointed to examples, such as debugging an AI system or complying with GDPR, where transparency would be very helpful.

Instead, the experts suggested that interpretability be seen for what it is: one method, possibly among many, for building trustworthy artificial intelligence, and a concept that is, by its very nature, a little murky itself.

"When we think about what interpretability might mean and when it's not a technical diagnosis, we're asking, 'Can we say why x input led to y output?'" Hume said. "And that's a causal question imposed upon a correlative system." In other words, it is a question that usually cannot be answered explicitly.

Probabilistic machine learning

Deep learning -- arguably the most hotly pursued subset of machine learning -- is especially challenging for businesses accustomed to traditional analytics, Ghahramani said. The algorithms require incredible amounts of data and computation, lack transparency, are poor at representing uncertainty, are difficult to trust and can be easily fooled, he said.

Ghahramani illustrated this by showing the audience two images -- a dog and a school bus. Initially, an image recognition algorithm successfully labeled the two images. "But then you add a little bit of pixel noise in a very particular way, and it confidently ... gets it wrong," he said. Indeed, the algorithm classified both images as an ostrich.

It's a big problem, he said, "and so we really need machine learning systems that know what they don't know."

One way to achieve this is a methodology called probabilistic machine learning, which explicitly accounts for uncertainty. It uses Bayes' Rule, a mathematical formula that, very simply, updates the probability of a hypothesis by combining its prior probability with how well that hypothesis explains the observed data.

"The process of going from your prior knowledge before observing the data to your posterior knowledge after observing the data is exactly learning," Ghahramani said. "And what you gain from that is information."
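The step from prior to posterior that Ghahramani describes can be sketched in a few lines of Python. This is a minimal illustration, not anything shown at the conference: the two hypotheses, the probabilities and the function names `bayes_update` and `entropy_bits` are all made up for the example. It applies Bayes' Rule in the form P(h | data) = P(data | h) * P(h) / P(data), then uses Shannon entropy as one possible way to measure how much uncertainty remains.

```python
import math


def bayes_update(prior, likelihood):
    """Apply Bayes' Rule over a discrete set of hypotheses.

    prior[h] is P(h) before seeing the data; likelihood[h] is P(data | h).
    Returns the posterior P(h | data) for every hypothesis h.
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(data), the normalizing constant
    if evidence == 0:
        raise ValueError("the data is impossible under every hypothesis")
    return {h: p / evidence for h, p in unnormalized.items()}


def entropy_bits(dist):
    """Shannon entropy in bits: higher means more uncertainty."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


# Hypothetical numbers, chosen only for illustration.
prior = {"dog": 0.5, "ostrich": 0.5}        # no preference before the data
likelihood = {"dog": 0.8, "ostrich": 0.2}   # how well each label explains the image

posterior = bayes_update(prior, likelihood)
print(posterior)
print(entropy_bits(prior), entropy_bits(posterior))
```

With these invented numbers, the posterior puts about 0.8 on "dog", and the entropy falls from 1 bit to roughly 0.72 bits. A single observation can also raise entropy if it contradicts a confident prior, so the drop shown here is a property of this example, not a guarantee.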

The Mindfulness Machine

Like most IT conferences, the O'Reilly show also had a marketplace of vendors showing off their wares. But enterprise tech wasn't the only thing on display.

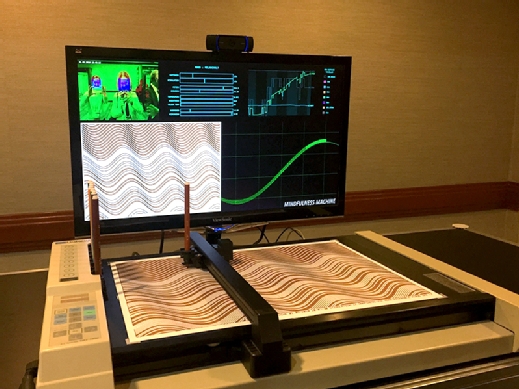

The Mindfulness Machine, an art installation by Seb Lee-Delisle, was also there. Originally commissioned by the Science Gallery Dublin for its "Humans Need Not Apply" exhibition, it sat quietly against a wall across from conference rooms where sessions were being held.

And it colored.

Indeed, the Mindfulness Machine is a robot equipped with sensors that track the surrounding environment, including weather, ambient noise and how many people are watching it. The data establishes a "mood," which then influences the colors the machine uses in the moment.

When I walked by on my way to lunch, the Mindfulness Machine was using an earthy brown to fill in a series of wavy lines. Its mood? Melancholy.