The shift to edge computing is happening fast -- here's why

Whether you call it the Fourth Industrial Revolution or digital transformation, enterprise IT is being changed fast, and forever, and edge computing is a big reason why.

Paradigm change. Bigger than cloud. Massively disruptive. The emergence of edge computing tends to be talked about in near-apocalyptic terms. What is edge computing, what's driving it, and what will be its mark on enterprise computing?

Let's start by taking a step back. Edge computing is not a new thing in the annals of enterprise computing. Since the introduction of the mainframe, enterprise computing can be summarized as the struggle between two antagonistic forces: the power of central processing versus the utility of putting compute close to where the data is generated -- that is, on the edge.

The mainframes that set this dialectic in motion gave the world its first opportunity to take advantage of high-speed calculations and data crunching. But these wondrous machines were inaccessible; they were housed in customized rooms and required highly specialized training. Suffice it to say, the computing eras that followed in rapid succession were responses to the limitations of that early version of centralized compute, which also kept evolving. From the desktops, laptops, smartphones and other innovations that extended the edge, to the local area networks, internet and public clouds that have industrialized the core, organizations have reaped extraordinary benefits from the tension between these two forces.

But something is happening right now that could irreversibly change the dynamic between centralized and edge computing. The periphery is being empowered by smart sensors and smart actuators at an unprecedented pace.

Call it the Fourth Industrial Revolution or digital transformation, the evidence for the growth in edge computing is overwhelming. While today only about 20% of enterprise data is being produced and processed outside of centralized data centers, by 2025, that is expected to rise to 75% and could reach 90%, according to Gartner. And there is no reason to assume the trend will abate.

New business models in a connected world

Said Tabet, Ph.D., lead technologist for IoT strategy at Dell EMC, said the rapid adoption of smart devices is driving the need for an edge computing strategy.

"What has changed? We are wearing connected watches. We have connected cars. We expect connected devices in the doctor's office, at the retail store and in our backyards," Tabet said. Compare this phenomenon with the advent of the web when there was a gap between its introduction and commercial adoption.

"The systems stayed on the outside," he said. "Now we are bringing systems in as we connect more of the world, whether in the enterprise or the industrial world or personal lives. With the acceleration of adoption, more business models are being created that did not exist before."

Industry experts point to a multitude of factors for this shift in balance between the core and the edge. They stem from both the positive attributes of edge computing and certain negative features of centralized computing.

Six drivers of edge computing

Physics plays a big part in the drive to edge computing, starting with latency. In any situation where the latency between sensing and responding needs to be extremely low -- for example, certain actions in autonomous driving -- it makes sense to put the computer that's processing the data from the sensors and actuators close to these devices; in the case of autonomous cars, it's imperative, as delays associated with going to the cloud could be life-threatening.
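The latency argument can be made concrete with a simple budget check. The sketch below is illustrative only: the 25-millisecond deadline echoes the plant-floor reaction time quoted in this article, while the `Site` class, the function name and every network and compute figure are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Site:
    """A place where sensor data could be processed."""
    name: str
    network_round_trip_ms: float  # sensor -> compute -> actuator transit time
    compute_ms: float             # time to process one reading

    def response_ms(self) -> float:
        # Total time between sensing and responding.
        return self.network_round_trip_ms + self.compute_ms


def sites_meeting_deadline(sites: List[Site], deadline_ms: float) -> List[str]:
    """Return the names of sites whose total response time fits the deadline."""
    return [s.name for s in sites if s.response_ms() <= deadline_ms]


# Hypothetical figures, for illustration only.
candidates = [
    Site("edge controller", network_round_trip_ms=2.0, compute_ms=8.0),
    Site("cloud region", network_round_trip_ms=60.0, compute_ms=3.0),
]

print(sites_meeting_deadline(candidates, deadline_ms=25.0))
```

With these made-up numbers only the edge controller fits the budget, even though the cloud site computes faster. For a safety-critical deadline, the figures that matter would be worst-case times rather than averages.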

Cloud costs, latency give rise to edge computing solutions

As enterprises have gained a better understanding of cloud computing, the need for an edge computing strategy has come into sharper focus, said Stephan Biller, vice president for offering management at IBM Watson IoT.

"Just five years ago, people thought, 'We will have absolutely no edge; everything will be done in the cloud because it's so much cheaper, has so much more compute power and so forth,'" Biller said.

"And then we realized that doing this was actually cost prohibitive in some cases and, in many cases, too slow. If on a plant floor you need a reaction time of 25 milliseconds so somebody doesn't get hit by a robot, the cloud is simply not fast enough or even reliable enough to navigate those safety issues."

Bandwidth is another reason for the shift to edge computing. Edge sensors can generate enormous amounts of data that can easily exceed the bandwidth of an internet connection. Take, for example, the Toshiba corporate center in Kawasaki, outside Tokyo, an IoT testbed facility under the aegis of the Industrial Internet Consortium (IIC), Dell EMC and Toshiba. The complex of buildings, host to 8,000 daily occupants, has thousands of sensors sprinkled throughout it, measuring everything from temperature and humidity to elevator efficiency and conference room occupancy. The project, which analyzes 35,000 measured data points per minute, is using deep learning techniques to figure out how to secure and optimize the building's assets, with the larger aim of developing a blueprint for engineering other smart buildings, explained Said Tabet, Ph.D., the IIC lead on the Toshiba project and lead technologist for IoT strategy at Dell EMC. The data the sensors collect is being streamed to a command center on premises because there is no straightforward way of getting 300 TB of data per day from one building into the cloud. Nor is there any rational reason to do it because much of the time-series data from the sensors isn't valuable.
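A back-of-the-envelope calculation shows why streaming that volume off site is impractical. This sketch assumes decimal units (1 TB = 10^12 bytes), which the article does not specify, and ignores compression and protocol overhead; the function names are illustrative.

```python
# Rough check: what sustained uplink would a given daily data volume need?
# Assumption: decimal units (1 TB = 10**12 bytes); the article does not say.

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def required_gbps(terabytes_per_day: float) -> float:
    """Average link rate, in gigabits per second, needed to move the
    given daily volume with no compression, overhead or idle time."""
    bits_per_day = terabytes_per_day * 10**12 * 8
    return bits_per_day / SECONDS_PER_DAY / 10**9


def points_per_second(points_per_minute: float) -> float:
    """Convert a per-minute measurement rate to a per-second rate."""
    return points_per_minute / 60


if __name__ == "__main__":
    print(f"{required_gbps(300):.1f} Gbps sustained")        # about 27.8
    print(f"{points_per_second(35_000):.0f} points/second")  # about 583
```

Under those assumptions, 300 TB per day works out to roughly 27.8 Gbps of continuous upload, around the clock, from a single campus.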

Persistent connectivity also drives compute to the edge. In situations where absolute reliability is required -- for example, monitoring a cardiac pacemaker -- an edge network under the company's complete control arguably offers more assurance than an internet connection that is not under the company's control.

Said Tabet

A fourth reason is related to privacy, security and regulatory concerns. For processes that don't need to be connected to the internet, a local repository can be more secure and may not require the mandatory anonymizing of data that data protection laws stipulate. (See section below: "An edge computing solution designed to give seniors an edge.")

Recent breakthroughs in AI promise to be the strongest drivers of edge computing. Although the central processing of large data sets is fundamental to building an AI solution, the resulting machine-learning algorithms can be dispatched to the edge, allowing organizations to put more real-time intelligence in production at places where the data is being generated. Advisory firm ABI Research has predicted that the share of AI inference performed on the edge will grow to 43% in 2023, up from just 6% in 2017, attributing the increase to "cheaper edge hardware, mission-critical applications, a lack of reliable and cost-effective connectivity options, and a desire to avoid expensive cloud implementations." (See section below: "AI at the edge: Optimizing the factory floor.")

This brings us to economics. Edge computing would not be taking off today if money were no object. For all the economies of scale offered by the cloud, the cost of storing and processing large sets of data is not negligible. As the data produced at the edge explodes, enterprises are finding it's not economical to move all the data back to a central processing facility, even if bandwidth and latency are not an issue. The Toshiba project's Tabet put it plainly: "The volume of the data, the latency, the speed are factors, but it is also the cost. We just can't afford to move the data to the cloud."

Piercing the fog: How to drive business value at the edge

As intuitive as the need for edge computing seems, driving business value on the edge will not be a trivial undertaking for IT or business leaders. For starters, definitions and formalisms are still fluid, and they tend to have many nuances.

Brian Hopkins

Gartner, for example, has underscored that, unlike the cloud, the edge is not a style of computing but a topology "where the boundaries between the physical and digital worlds are blurred." Forrester Research defines edge computing as a "family of technologies that distributes data and services where they best optimize outcomes in a growing set of connected assets." But Forrester analyst Brian Hopkins stressed that edge should not be viewed as a technology to buy but rather understood as "a new compute paradigm."

There is general agreement that today's version of edge computing is not divorced from the cloud. Gartner analysts Thomas Bittman and Bob Gill designated "a connection to a broader digital world" as a defining characteristic of edge computing, explaining in an August 2018 report that a sensor connected only to a local computing device "isn't at the edge of anything. It's just a sensor, sending data to a local computer with which only local people can interact." The edge, they added, implies a core. IDC analyst Ashish Nadkarni, an enterprise infrastructure expert, also pointed to the "need to collect, store and analyze data in a cost-efficient manner" as a major factor in the enterprise's shift to the edge, but he argued that to maximize the edge's value, companies will need to merge their IT and operational technology architectures and practices.

Got edge strategy?

Jay Ferro

Veteran IT leader and angel investor Jay Ferro is hyperaware of edge computing.

"Do I have vendors telling me I need an edge strategy?" he said. "Daily. 'Jay, get the latest information in edge computing. Jay, are you taking advantage of IoT/edge? Buy this service, invest in this platform!' CIOs always get carpet bombed, but edge computing and IoT are probably what I get the most emails on."

Named CIO at Quikrete, the largest manufacturer of packaged concrete in the United States, in September 2018, Ferro said that at present his focus is on "blocking and tackling" IT issues. But he believes edge computing -- while not without risks -- will be a "liberating" force for technologists. "Bringing compute to where the business is happening is a good thing."

In short, there are as many ideas about edge computing out there as there are research houses -- and vendors. The latter, to be sure, are rushing to harness their products to this new computing mode -- products that will rapidly become obsolete because the field is evolving so fast. Edge deployments implemented in 2018 and 2019 will be replaced by new hardware and software by 2022, according to Gartner.

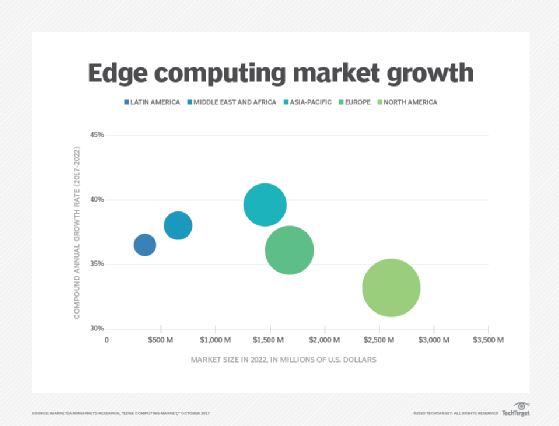

Indeed, the shift to edge computing, pegged to be a $7 billion market by 2022, will be a hardware and software bonanza for the IT industry, due in no small part to the plethora of technology in play between the edge and the central processing source.

"The world is not composed of just the edge and the center; there is a lot of compute that can happen in between, and that is what is unfortunately called fog computing," said computer scientist Richard Soley, executive director of the IIC and CEO and founder of Object Management Group, an industry standards consortium.

The distance between the centralized data center and the sensors, data actuators and other devices on the edge is not a straight line, but a many-layered ecosystem of regional data centers, micro data centers, cloudlets, edge servers, personal home assistants, edge controllers and embedded AI.

"The hard part about edge computing," Soley said, "comes down to, 'How do we architect our solution to best use our resources?'" He added that IT leaders will need to figure out how much compute can be done at the edge, how much in the center -- or along the way to the center -- and where the data pipes are small.

Richard Soley

"It sounds simple, but it can be extremely complicated, especially if data resources change over time and if the computer resources change over time," Soley said.

Groups such as the IIC and projects like the Open Glossary of Edge Computing are working on developing standard definitions and architectures. Meanwhile, edge products tend to be highly customized and designed for specific cases.

Here are some examples of companies finding value at the edge, and advice from their vendor partners on getting an edge strategy off the ground.

AI, big data, IoT fuel energy giant's edge computing strategy

The power industry has been a long-time leader in edge computing. European electricity and gas provider Enel is an energy giant, with 73 million customers in 35 countries and a network that spans some 2.2 million kilometers. The Rome-based utility works with C3.ai, a recently minted "unicorn" that develops software to help grid operators like Enel aggregate and analyze large quantities of data from smart meters, sensors and other equipment.

In January, Enel announced a new development in its work on the edge. The company said it was implementing a platform from C3.ai that would integrate the consumption data from its huge array of smart meters and energy generation nodes -- 47 million sensors at last count -- with data from across its businesses. Data from enterprise applications, such as ERP and HR, and from operational systems -- including Cloudera, MongoDB, Oracle, PostgreSQL, SAP HANA and Siemens -- would be integrated and aggregated in a "unified virtual data lake." The company said this holistic, secure and near real-time view of Enel's complex data landscape would enable its 100-strong team of developers, data scientists and business analysts to rapidly develop AI applications. In his remarks on the news, Fabio Veronese, head of infrastructure at Enel, said the capability would drive "a new era of operational efficiencies."

"It's a very advanced edge-driven use case," said Ed Abbo, president and CTO at C3.ai. "They're doing energy balancing and trading off of this information. They're doing forecasting on how much supply, for example, a particular wind turbine is going to have over the next 15 minutes, and how much power a customer will consume over that time period."

Ed Abbo

An edge strategy like this does not happen overnight. On Enel's part, the race to master the edge was more marathon than sprint, dating back nearly two decades to 2001, when the electricity and gas provider began replacing its Italian customers' conventional meters with digital smart meters, an industry first, according to the company. By 2006, Enel had 32 million sensors across Italy, enabling it to automate and manage a lot of its operations remotely, while giving end users a window into their power consumption. Smart meter installations followed in Spain, Chile and Brazil.

The connection between Enel and C3.ai, founded by Silicon Valley legend Tom Siebel, was also no easy affair. Their relationship began in 2013, when Abbo and Siebel traveled to Enel's Rome headquarters to secure an initial deal predicated on C3.ai's ability to load, map and process 50 billion data points -- a large amount back then. The high-stakes meeting, brokered by Enel's CIO and an Accenture consultant, was perking along but took an inauspicious turn when Enel's Veronese entered the room and cast doubt on C3.ai's ability to deliver the goods.

Known in the annals of the Enel and C3.ai partnership as the "wallet meeting," the showdown included a monetary bet between Siebel and Veronese, the details of which are described in an in-depth case study of Siebel by Stanford Business School faculty. A few months later, the deal was sealed with C3.ai's first product for the company, a fraud detection application that delivered 93% forecasting accuracy out of the gate, followed by a predictive maintenance application that today analyzes real-time network data, smart meter data, asset maintenance records and weather data to predict feeder failure.

Machine learning makes the edge smarter

C3.ai's predictive maintenance application for Enel integrates data from eight disparate systems (supervisory control and data acquisition, grid topology, weather, power quality, maintenance, workforce, work management and inventory). New features include using an advanced graph network approach and advanced machine learning to improve prediction.

Technical partnership, commitment from the top

When Veronese talks about an edge computing strategy today, it is in the context of a full-blown digital transformation initiative Enel launched in 2016. "The challenge is going full scale -- how can you go at full scale with big data and AI in a big corporation, because only then can you grasp the digital impact," Veronese said at C3.ai's March 2019 user meeting in San Francisco.

Even for enterprises as sophisticated as Enel, scaling large edge projects typically requires a technical partner with domain expertise as well as experience -- or experienced partners -- in the enabling technologies, including cloud and AI. (C3.ai partners with AWS, Microsoft Azure and Google Cloud, among others.)

Vendor commitment to get the initiative off the ground can be critical to realizing business value. C3.ai, for example, helped train the Enel team of data scientists and application developers to ensure it could design and deliver the thousands of algorithms the company says will help drive earnings growth of billions of dollars over the next three years.

Miniaturization a 'step function' change in technology

As edge computing matures, C3.ai's Abbo said he expects it to be at least as disruptive as cloud. As the price of sensors and processing has dropped, he said edge applications are already unlocking new business -- and not just for industry giants capable of fielding large elite teams.

A good example, he said, is in the drone business, where lightweight machines -- relatively inexpensive and outfitted with Nvidia Jetson TX2 processors -- are analyzing video feeds in real time from numerous cameras attached to them.

"They're doing object recognition. You can set them up so they just follow you around," Abbo said. The ability of these drones to communicate with each other to solve a problem without having to go back to the cloud is already producing breakthroughs in AI "swarm intelligence."

"Wind back the clock 10 years, and that was not practical for many reasons, whether because of power inefficiency or processing capability," Abbo said. "So that's a step-function change in technology that's occurred, and people across industries are looking at it and saying, 'Well, how can we use that?' It's opened up possibilities that really didn't exist before."

Asked if these transformative types of big data and AI-enhanced edge projects are for big companies only, Abbo said the products being designed today are aiming to democratize AI at the edge by reducing the number of people needed to design and deploy algorithms. Of the C3 AI Suite, he asserted: "You can basically build a production pilot with four people: a data scientist, an app developer, a data integration specialist and somebody who is a subject matter domain expert."

Houman Behzadi

Houman Behzadi, chief product officer at C3.ai, said that, in his experience, the biggest inhibitor to edge initiatives is not the technology, but lack of leadership, a perspective other experts and consultants echo. (See section below: "CIO opportunities and risks in the shift to edge computing.")

"Executive commitment is the most important thing that needs to be in place," Behzadi said. "This is transformation that requires change management, and the CEO needs to be driving it."

AI at the edge: Optimizing the factory floor

Stephan Biller, vice president for offering management at IBM Watson IoT, agreed that the latency and cost issues associated with cloud are driving edge computing. But without cloud's processing power and scale there is no edge computing, in Biller's view, at least not in the revolutionary terms it's being discussed today.

Case in point is an edge initiative at the LCD manufacturer Shenzhen China Star Optoelectronics Technology Co. (CSOT). Biller's IBM Watson team works with CSOT on automating and improving how the company inspects its LCD screens. Production quality insight, as it's called, typically involves countless hours of manpower, with factory shift upon shift of operators examining product images for flaws.

A company official who declined to be named said that visual inspection is arguably the most crucial part of the manufacturing process. "If we fail to spot defects before products are sent to device manufacturers, it could easily lead to costly product returns and rework, not to mention damage to your reputation."

A sophisticated deployment of cloud-enabled image-recognition software and edge tools, including high-definition cameras at the inspection point, has changed that process dramatically, Biller said, not only by cutting man-hours to minutes, but by automating continuous improvement in the inspection process.

"You can train artificial intelligence to know what the operator does -- this one is a good LCD, that is a bad LCD -- and over time you can calibrate the machine learning algorithm to predict how an operator will judge an image," Biller said. At that point, the company could replace the operator and likely improve accuracy in the bargain because the software does not get fatigued. Additionally, training operators can take four weeks or longer, while the AI training can be done in a couple of hours, he said.

Stephan Biller

How this happens "is a little complex," Biller said. The heavy lifting on the AI -- building the AI models that "score" the images -- requires a lot of compute power and is done in the cloud. (This could conceivably be done on location, but "then you would have an incredible amount of compute power in that factory that isn't actually needed," he contended, and that could even bring down operations.) Once the scoring model is built, it is downloaded to an edge computer working in runtime at the factory. The software provides a "confidence level" for every image (ranging from 0 to 100) that prompts an inspector to review images that don't pass muster. "If things change and we need to recalibrate the model, we go back to the cloud," Biller said, so there is continuous learning. "This is an edge to the cloud strategy -- in an application."
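The review step Biller describes can be pictured as a threshold on the confidence level. The sketch below is not IBM's or CSOT's implementation; the names, the cut-off of 90 and the assumption that low-confidence images are the ones routed to an inspector are all hypothetical.

```python
from typing import Iterable, List, NamedTuple, Tuple


class ScoredImage(NamedTuple):
    image_id: str
    confidence: float  # 0 to 100, as reported by the model running at the edge


REVIEW_THRESHOLD = 90.0  # hypothetical cut-off; the article gives no figure


def split_for_review(
    scored: Iterable[ScoredImage],
    threshold: float = REVIEW_THRESHOLD,
) -> Tuple[List[str], List[str]]:
    """Partition image IDs into (handled_automatically, needs_inspector)."""
    automatic: List[str] = []
    needs_inspector: List[str] = []
    for item in scored:
        if not 0.0 <= item.confidence <= 100.0:
            raise ValueError(f"confidence out of range for {item.image_id}")
        if item.confidence >= threshold:
            automatic.append(item.image_id)
        else:
            needs_inspector.append(item.image_id)
    return automatic, needs_inspector


batch = [
    ScoredImage("panel-001", 97.5),
    ScoredImage("panel-002", 42.0),
    ScoredImage("panel-003", 90.0),
]
print(split_for_review(batch))
```

In a setup like this, the inspector's verdicts on the low-confidence images are the natural material to send back to the cloud when the model needs recalibrating.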

Edge fulfills cloud promise of system optimization

The benefits of a hybrid edge and cloud strategy extend beyond cutting manpower and improving accuracy, Biller argued. The software "learnings" at one plant -- while unique in some ways to that facility -- can be transferred to a company's other facilities, allowing for "knowledge-sharing that you couldn't have done if you had all [the scoring operations] in one factory," he said.

Indeed, the potential for system-level optimization that edge computing unlocks might be the paradigm's biggest calling card, according to Biller. Consider the edge technologies deployed in metropolitan areas to optimize traffic patterns versus the thousands of lines of code written into a single vehicle to automate driving, he said, drawing on his prior work on autonomous cars at General Motors.

"On the road, I can mainly optimize my own car. If I have radar, I might be able to optimize how I interact with the cars right next to me, and if these cars also have sensors that communicate, we can achieve a local optimization," he explained.

Low latency is critical -- in fact, it's a life-saving component. Developing a central system to manage traffic at city intersections also requires local devices to capture data, but analyzing the massive video and other data files needed to optimize the system can only practically be done in the cloud. Edge completes the cloud.

"Edge will fulfill a promise that cloud made and couldn't hold" because of latency and cost issues, Biller said. "Edge will complement cloud, and the hybrid will be revolutionary."

An edge computing solution designed to give seniors an edge

K4Connect, which describes itself as a "mission-centered technology company," is using edge and cloud computing to make smart technology a way of life in senior communities. Technology is not the problem this Raleigh, N.C., startup is aiming to solve, according to Kuldip Pabla, but it is a key element of the solution.

"At K4, we're dealing with older adults. If they are not served technology in the right way, they think it's their problem," said Pabla, senior vice president of product and engineering at K4Connect. "If we cannot respond in seconds or milliseconds, they assume they don't know how to use the technology, and they'll never use it again, thinking they are too dumb to make it work."

Kuldip Pabla

K4Connect's multimodal, modular software platform is designed to integrate many disparate smart edge devices and services -- from motion sensors and smart lights to fitness apps and voice assistants like Amazon Alexa -- all on a single system. More than that, the FusionOS, as its operating system is called, can run in the cloud, on a server or on the K4Box, a lower-powered local edge controller installed in residences.

Similar to, but more powerful than, a smartphone -- which also can pair up with smart sensors and provide some smart home functionality -- the K4Box edge controller is able to store a lot of information locally; when cloud connectivity is unavailable or low, it can serve locally. The edge controller not only addresses the latency issues that can discourage senior residents from using tech, but also mitigates privacy concerns, Pabla said. "If we don't bring private and personal data to the cloud, we'll have to worry less about protecting it."
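The "serve locally" behavior can be sketched as a local-first lookup. This is a generic pattern rather than K4Connect's actual design; `EdgeStore`, `offline_cloud` and the key names are hypothetical.

```python
from typing import Callable, Dict, Tuple


class EdgeStore:
    """Answer from local storage first; use the cloud only on a local miss."""

    def __init__(self, cloud_fetch: Callable[[str], str]) -> None:
        self._cloud_fetch = cloud_fetch  # may raise ConnectionError
        self._local: Dict[str, str] = {}

    def save_local(self, key: str, value: str) -> None:
        """Store a value on the controller itself."""
        self._local[key] = value

    def get(self, key: str) -> Tuple[str, str]:
        """Return (value, source), where source is 'local' or 'cloud'."""
        if key in self._local:
            return self._local[key], "local"
        value = self._cloud_fetch(key)  # ConnectionError propagates on outage
        self._local[key] = value        # keep a copy for next time
        return value, "cloud"


def offline_cloud(key: str) -> str:
    """Stand-in for a cloud call during an outage."""
    raise ConnectionError("cloud unreachable")


store = EdgeStore(offline_cloud)
store.save_local("lights/room-204", "on")
print(store.get("lights/room-204"))
```

Because the lookup never leaves the controller when the data is already local, the request succeeds during an outage and the response time does not depend on the internet connection.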

More compute and storage coming to edge devices

Pabla has been operating on the front lines of mobile and cloud over a nearly three-decade career. Early on, he was among the wave of peer-to-peer computing enthusiasts working on how to use large devices to bring intelligence, or parity, to a small edge device like a flip phone. "I also spent a lot of time at the other extreme on the cloud side, scaling technology to serve millions of users simultaneously," he said. He sees the pendulum swinging again.

"Edges are making things decentralized. The constraints, as of today, are the compute power and storage on the controllers -- and the pricing," Pabla said. As more compute and storage are added, the price goes up, so he said K4Connect is working with Intel Original Design Manufacturers to design next-generation edge controllers that can be sold at the right price point -- and do more.

Pabla said the edge alerts done today are relatively simple. "The resident in room 204 usually goes to the bathroom at night three times, and she has already visited five times; she may be having problems," he said, as an example of an alert that might be sent to a senior center front desk. But as compute and storage barriers are lowered, many more scenarios -- and more consequential ones -- will open up, Pabla said. "We will make lots of health decisions -- emergency decisions -- locally."
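An alert of the kind Pabla describes amounts to comparing a running count against a resident's usual pattern. The following is a minimal sketch, not K4Connect's logic; the `VisitMonitor` class, the `margin` tolerance and the message format are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VisitMonitor:
    room: str
    usual_visits: int        # this resident's typical nightly count
    margin: int = 1          # hypothetical tolerance before alerting
    visits_tonight: int = 0
    alerted: bool = False

    def record_visit(self) -> Optional[str]:
        """Count one visit. Return an alert message the first time the
        count exceeds usual_visits + margin; otherwise return None."""
        self.visits_tonight += 1
        over_limit = self.visits_tonight > self.usual_visits + self.margin
        if over_limit and not self.alerted:
            self.alerted = True
            return (f"Room {self.room}: {self.visits_tonight} visits tonight "
                    f"(usual {self.usual_visits})")
        return None


monitor = VisitMonitor(room="204", usual_visits=3)
alerts = [monitor.record_visit() for _ in range(5)]
print(alerts[-1])  # Room 204: 5 visits tonight (usual 3)
```

A rule this small needs only a counter and a baseline, which is why it already fits on today's controllers; the health decisions Pabla anticipates would need considerably more local compute and storage.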

CIO opportunities and risks in the shift to edge computing

How should CIOs and other IT leaders start thinking about an edge computing strategy? When the topic was raised at a March 2019 CIO conference in Boston, a few points kept coming up, beginning with the common refrain that edge computing is not necessarily new -- new-fangled definitions notwithstanding -- and it is not about technology per se.

"The discussion about edge computing starts with what can be gained from a business standpoint," said Tim Crawford, CIO strategic advisor at AVOA and host of the CIO In the Know (CIOitk) podcast. CIOs -- the transformational ones -- should be thinking about edge computing in the same way their business colleagues do, he said.

"The rest of the C-suite isn't having a conversation about what sensors or wearables to get involved in. They're asking, 'How can we engage customers?' or 'How can we bring telemetry from the device into operations?'" Crawford said. "Companies have to decide what is the right role for edge to play -- and if there is an appropriate place for it."

CIOs will likely need help from experts in IoT and other aspects of orchestrating the specialized devices, networks and algorithms in edge computing. But the technology will be less challenging for CIOs and their IT teams than architecting the edge strategy.

Tim Crawford

"It's more challenging from an architectural standpoint because many organizations have existing architectures, so they're trying to fit [edge components] in -- to take that square peg and put it in a round hole," Crawford said.

Isaac Sacolick, president of StarCIO and a former CIO at McGraw-Hill Construction and Businessweek, said that the shift to edge computing gets many CIOs very excited because it "puts them back in the infrastructure game."

"They truly love thinking about the problem -- the volume of data, the latency, the security aspects of where the data is going. 'Will 5G solve the latency problem, or do I need to put compute on the edge and have it solved there?'" said Sacolick, author of a book on digital transformation.

He advised CIOs to "leave the compute aside for a second" and think about the algorithm: "What algorithms do you need and where [do you need them] to do different types of decision-making?" A CIO in manufacturing, for example, with 15 plants globally, should be thinking about what types of algorithms are easier to implement and cheaper to operate on an edge device than trying to bring all that data back centrally. Security is also a big issue, Sacolick added, arguing that the edge is not necessarily more secure, edge vendor arguments notwithstanding. "Absent of wait-and-see and cost, I would rather have one place with the right level of security than 21 places to manage," he said.

Speaking of vendors, "It's a mess," Crawford said, noting that vendors "aren't doing themselves any favors" by following the gold rush. "We saw this with cloud washing -- everyone all of a sudden was in the cloud."

Isaac Sacolick

Sacolick agreed that CIOs likely will need technical help to execute their edge strategies. But, more important, they will need to be discerning in choosing their edge vendors.

"What I tell CIOs is that sometimes you have to have the expertise to build something, but more often than not you're going to have the expertise to know how to go shopping," he said.

The landscape of technology incumbents and startups in the AI and IoT space for edge computing alone is overwhelming. "There are hundreds of companies out there, and you already know as a CIO that a third won't be around in five years, a third will be acquired and a third might end up being really stable businesses," Sacolick said.

"That's the role of the CIO -- to look at these different factors and do something that makes sense," he added. "Those kinds of buying decisions, and how they think about the architecture and cost factors, that's where the CIO risk is."