Edge-to-cloud architecture turns key for digital initiatives

Enterprises pursuing digital transformation initiatives may need to reexamine their infrastructure architecture to bring compute and storage closer to data generated at the edge.

"The future is edge to cloud" resonated like a politician's oft-repeated campaign slogan throughout the keynotes and customer presentations at the recent HPE Discover 2021 conference.

Antonio Neri, HPE president and CEO, said an edge-to-cloud architecture would be a "strategic imperative" for every enterprise that is exiting the "data-rich but insight-poor" information era and moving into the "age of insight" to extract greater business value from its ever-growing volumes of data.

Enterprises have long transferred data from remote, branch and satellite sites to a core data center and, increasingly, also to the public cloud. But the digital transformation initiatives that many pursue to gain greater insight from their IoT, sensor and other far-flung data may force some to reexamine IT infrastructure architecture to facilitate data processing at the edge.

Shifting storage to the edge

Gartner predicts that more than 40% of enterprise storage infrastructure will be deployed at the edge by 2025, compared to only 15% today. By Gartner's definition, "edge storage" is responsible for the creation, analysis, processing and delivery of data services at or close to the location where the data is generated or consumed.

Industrial IoT, video surveillance and inference at the edge are just a few of the new workloads that require edge-to-cloud data processing, noted Julia Palmer, a research vice president at Gartner. As more enterprises move to the public cloud, she said, they realize that data is the hardest issue to address.

"Because of the speed of light and data gravity, not all of the data and storage can be or should be in the [public] cloud," Palmer said.

Palmer advised enterprises to choose storage platforms that are software-defined and scalable enough to handle massive data volumes. She said storage products should also focus on data management, data transfer, performance density and autonomous operations to ease administration.

HPE focuses on GreenLake as a service

For HPE, edge-to-cloud architecture centers on its GreenLake as-a-service platform designed to simplify on-premises infrastructure deployment, similar to the way the public cloud does. GreenLake customers can get compute, storage and networking resources when they need them, paying only for what they use. GreenLake Management Services are also available for monitoring, operations, administration and system optimization.

A new GreenLake Lighthouse turnkey option goes a step further in the quest to reduce complexity, offering a standard, configurable, cloud-native platform of integrated hardware, software and services with cloud-like ease of provisioning. Customers can run cloud services in their data centers, with a colocation provider or at the edge, and HPE supports virtual machine, container and bare-metal deployments.

"This flexibility of that architectural stack is a key building block to our strategy," Robert Christiansen, vice president of strategy in the office of the CTO at HPE, said in an interview.

Christiansen said customers could stack runtime software and applications on top of the GreenLake-provisioned infrastructure. HPE made a series of acquisitions over the last few years to put together a platform that includes its Ezmeral Container Platform to deploy and manage container-based applications; Ezmeral Data Fabric to ingest, store, manage and process data; and Ezmeral ML Ops to prepare data and build, train and deploy models for machine learning use cases.

Hybrid multi-cloud with variable edge

HPE's edge-to-cloud architectural model assumes a hybrid, multi-cloud environment with an edge that could be near, far, tiny or fat, depending on the sizing and capability needs of the customer, Christiansen said.

"What we know is that clients are wanting to take action where business is transacting -- on the manufacturing floor, in retail locations. This is decentralized healthcare, insurance, for example," Christiansen said. "The 'edge' word needs to be defined per client and industry."

Christiansen cited a healthcare customer operating in the U.S. and Hong Kong that wants a common data layer between the locations. The provider is focusing on AI and machine learning (ML) training with patient positioning data collected from a video analytics system used in the hospitals, he said.

Customer use cases

Another HPE customer, Texas Children's Hospital, now recognizes that data it has stored for 20 or more years presents a "rich world of opportunity" to gain greater insight into diseases and diagnostics and potentially even anticipate patient needs by targeted populations, said Myra Davis, the organization's chief information and innovation officer.

Stephen Voice, head of global digital health solutions at Novartis, said the pharmaceutical company is working with HPE to explore opportunities to study the data behind disease outbreaks and use the insights for global health purposes. Some of the data could come from the myriad edge devices that health workers use.

Another HPE customer, Wells Fargo, is seeing increasing numbers of customers bank on its mobile app from endpoints at or close to the edge. The financial institution now brings the data back to the central data center to meet regulatory requirements and generate insights, Sandra Nudelman, head of consumer data and engagement platforms at Wells Fargo, said. But moving forward, the bank plans new initiatives, including personalization, that will require compute to be closer to the edge device and to the customer to serve up data in real time, Nudelman said.

Spend time on the business case

Juan Orlandini, chief architect in the cloud and data center transformation division at IT consultancy Insight, offered up the following advice to companies that want to make better use of their edge data: "Make sure you have the right business case. Spend time on it. Ideate on it. Do the proof of concept. Prove the value to the business and then scale. Don't do it in any other order."

Market research studies show that more data will be generated at the edge than at the data center core in the near future, Orlandini noted. Higher-performing networking and connectivity options, such as 5G, Wi-Fi 6 and LoRaWAN, are making it cheaper to deploy tons of devices at the edge, he said.

Now that clients also have the computing horsepower and tools to analyze the data they're collecting, they know they can consider new ways to derive more value from it to transform their businesses or improve customer experiences, Orlandini said. But he is finding that many enterprises have a hard time figuring out where to start, and others have simply run out of ideas.

"It used to be the hard part was connecting the things, doing the math on it and managing a fleet of devices," Orlandini said. "Now the hard part is figuring out what you're going to do with the possibilities this opens up for you."

Infrastructure architecture options

For supporting infrastructure, Orlandini said, most organizations do not undertake a wholesale rip-and-replace of their existing systems but instead employ an edge-to-cloud architecture for select use cases. He said the as-a-service options from major vendors such as Cisco, Dell, HPE, NetApp and Pure Storage can help clients that have scalability concerns or want to get a project off the ground more quickly.

Edge-to-cloud infrastructure alternatives also include the on-premises options of the public cloud providers -- notably AWS Outposts, Microsoft Azure Stack and Oracle Cloud@Customer -- that let customers dynamically move processing and storage to and from the cloud, Marc Staimer, president of Dragon Slayer Consulting, added.

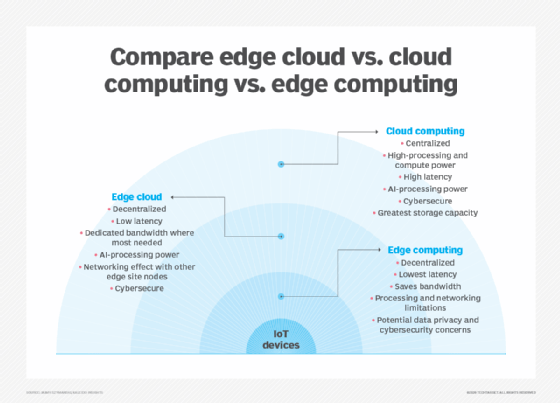

Staimer views the shift from a centralized to a decentralized architecture as nothing more than the latest swing of a pendulum that has gone back and forth over the last 40 years. He said the driver for the latest edge-to-cloud approach is the need for real-time decision-making. Seconds, and potentially milliseconds, matter when an AI or ML system must determine whether a package is a bomb, or when an autonomous vehicle must distinguish whether a street obstacle is a bag or a person, Staimer said.

"Until we can develop wormhole technology, the only way to deal with speed-of-light latency is to move the compute to the data. Every meter you go is going to add to your latency. There's no way around it," Staimer said. "It's a hell of a lot less effort to move a small amount of compute to the data than it is to move a whole lot of data to the compute."
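To put rough numbers on Staimer's point: light in optical fiber travels at roughly two-thirds of its speed in a vacuum, or about 200,000 km per second, so every kilometer of fiber adds about 5 microseconds each way. The sketch below is a back-of-the-envelope illustration, not a network model. It assumes that two-thirds velocity factor, uses hypothetical example distances and ignores routing, queuing and processing delays, which add considerably more in practice.

```python
# Back-of-the-envelope propagation latency over optical fiber.
# Assumption: signals in fiber travel at about 2/3 the speed of light in a vacuum.
# Only propagation delay is modeled; routing, queuing and processing are ignored.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_VELOCITY_FACTOR = 2 / 3  # approximate; depends on the fiber's refractive index


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay, in milliseconds, over fiber."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_PER_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    # Hypothetical distances from a sensor to where its data is processed.
    for label, km in [("on-site edge server", 0.1),
                      ("metro data center", 50),
                      ("regional cloud", 1_000),
                      ("cross-continent cloud", 4_000)]:
        print(f"{label:>22}: {round_trip_ms(km):7.3f} ms")
```

Under these assumptions, a cloud region 1,000 km away imposes a floor of about 10 ms on every round trip before any computation starts, while an on-site server adds only microseconds. That gap is the physical reason Staimer gives for moving compute to the data.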

Carol Sliwa is a TechTarget senior writer covering enterprise architecture, storage arrays and drives, and flash and memory technologies.