robotics

What is robotics?

Robotics is a branch of engineering and computer science that involves the conception, design, manufacture and operation of robots. The objective of the robotics field is to create intelligent machines that can assist humans in a variety of ways.

Robotics can take on a number of forms. A robot might resemble a human or be in the form of a robotic application, such as robotic process automation, which simulates how humans engage with software to perform repetitive, rules-based tasks.

While the field of robotics and exploration of the potential uses and functionality of robots have grown substantially in the 21st century, the idea certainly isn't new.

The early history of robotics

The term robotics is an extension of the word robot. One of the first uses of the word robot came from Czech writer Karel Čapek, who used it in his 1920 play, R.U.R. (Rossum's Universal Robots).

However, the Oxford English Dictionary credits science fiction author Isaac Asimov with being the first person to use the term robotics, in the 1940s. In his short story "Runaround," Asimov proposed three principles to guide the behavior of autonomous robots and smart machines:

- Robots must never harm human beings.

- Robots must follow instructions from humans without violating rule 1.

- Robots must protect themselves without violating the other rules.

His three laws of robotics have survived to the present day. However, it wasn't until a couple of decades later, in 1961, that the first programmable robot -- called Unimate, derived from universal automation -- was created. Based on designs from the 1950s, it moved scalding metal pieces from a die-casting machine. The Stanford Research Institute's robot, dubbed Shakey, followed in 1966 as the first mobile robot, thanks to software and hardware that enabled it to sense and reason about its environment, though in a limited capacity.

Robotics applications

Today, industrial robots, as well as many other types of robots, are used to perform repetitive tasks. They can take the form of a robotic arm, a collaborative robot (cobot), a robotic exoskeleton or traditional humanoid robots.

Industrial robots and robot arms are used by manufacturers and warehouses, such as those owned by Amazon and Best Buy.

To function, a robot or robotic system relies on a combination of computer programming and algorithms, a remotely controlled manipulator, actuators, control systems -- covering action, processing and perception -- real-time sensors and an element of automation.
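The perception, processing and action stages mentioned above can be illustrated with a toy control loop. This is only a minimal sketch: the `Robot1D` class, its proportional gain and its step limit are hypothetical and stand in for real sensors, controllers and actuators.

```python
from dataclasses import dataclass


@dataclass
class Robot1D:
    """Hypothetical robot that moves along a line toward a target position."""
    position: float = 0.0

    def sense(self, target: float) -> float:
        # Perception: a simulated sensor reports the signed distance to the target.
        return target - self.position

    def decide(self, error: float, gain: float = 0.5, max_step: float = 1.0) -> float:
        # Processing: a proportional controller turns the error into a command,
        # clamped to what the simulated actuator can deliver in one step.
        return max(-max_step, min(max_step, gain * error))

    def act(self, command: float) -> None:
        # Action: the simulated actuator moves the robot.
        self.position += command


def run(robot: Robot1D, target: float, steps: int = 50, tolerance: float = 0.01) -> int:
    """Repeat sense -> decide -> act until close to the target; return steps used."""
    for step in range(steps):
        error = robot.sense(target)
        if abs(error) <= tolerance:
            return step
        robot.act(robot.decide(error))
    return steps


robot = Robot1D()
steps_used = run(robot, target=5.0)
print(f"stopped at {robot.position:.3f} after {steps_used} steps")
```

A real system would replace each method with hardware drivers and a more capable controller, but the repeating sense, decide and act cycle is the same basic shape.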

Some additional applications for robotics include the following:

- Home electronics. Vacuum cleaners and lawnmowers can be programmed to automatically perform tasks without human intervention.

- Home monitoring. This includes specific types of robots that can monitor home energy usage or provide home security monitoring services, such as Amazon Astro.

- Artificial intelligence (AI). Robotics is closely tied to AI and machine learning (ML), which robots use for object recognition, natural language processing, predictive maintenance and process automation.

- Data science. The field of data science relies on robotics to perform tasks including data cleaning, data automation, data analytics and anomaly detection.

- Law enforcement and military. Both law enforcement and the military rely heavily on robotics, as it can be used for surveillance and reconnaissance missions. Robotics is also used to improve soldier mobility on the battlefield.

- Mechanical engineering. Robotics is widely used in manufacturing operations and in inspection work, such as checking pipelines for corrosion and testing the structural integrity of buildings.

- Mechatronics. Robotics aids in the development of smart factories, robotics-assisted surgery devices and autonomous vehicles.

- Nanotechnology. Robotics is extensively used in the manufacturing of microelectromechanical systems (MEMS), which are tiny integrated devices that combine mechanical and electrical components.

- Bioengineering and healthcare. Surgical robots, assistive robots, lab robots and telemedicine robots are all examples of robotics used in the fields of healthcare and bioengineering.

- Aerospace. Robotics can be used for drilling, painting, coating, inspection and maintenance of aircraft components.

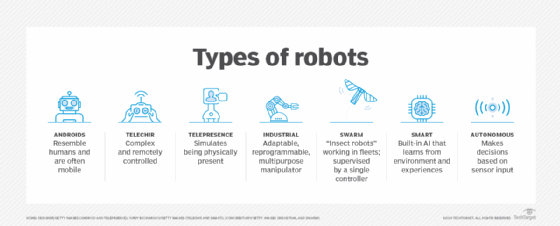

Types of robots

Robots are designed to perform specific tasks and operate in different environments. The following are some common types of robots used across various industries:

- Industrial robots. Frequently used in manufacturing and warehouse settings, these large programmable robots are transforming the supply chain by performing tasks such as welding, painting, assembling and material handling.

- Service robots. These robots are used in a variety of fields in different scenarios, such as domestic chores, hospitality, retail and healthcare. Examples include cleaning robots, entertainment robots and personal assistance robots.

- Medical robots. These robots help with surgical procedures, rehabilitation and diagnostics in healthcare settings. Robotic surgery systems, exoskeletons and artificial limbs are a few examples of medical robots.

- Autonomous vehicles. These robots are mainly used for transportation purposes and can include self-driving cars, drones and autonomous delivery robots. They navigate and make decisions using advanced sensors and AI algorithms.

- Humanoid robots. These robots are programmed to mimic human movements and actions. They look humanlike and are employed in research, entertainment and human-robot interaction.

- Cobots. Unlike most other types of robots, which do their tasks alone or in entirely separate work environments, cobots can share workspaces with human employees and help them work more productively. They're typically used to remove costly, dangerous or time-consuming tasks from routine workflows. Some cobots can recognize and respond to human movement.

- Agricultural robots. These robots are used in farming and agricultural applications. They can plant, harvest, apply pesticides and check crop health.

- Exploration and space robots. These robots are used in missions to explore space as well as in harsh regions on Earth. Examples include underwater exploration robots and rovers used on Mars expeditions.

- Defense and military robots. These robots aid military tasks and operations including surveillance, bomb disposal and search-and-rescue missions. They're specifically designed to operate in unknown terrains.

- Educational robots. These robots are created to instruct and educate kids about robotics, programming and problem-solving. Kits and platforms for hands-on learning in academia are frequent examples of educational robots.

- Entertainment robots. Created for entertainment purposes, these robots come in the form of robotic pets, humanoid companions and interactive toys.

Machine learning in robotics

Machine learning and robotics intersect in a field known as robot learning. Robot learning is the study of techniques that enable a robot to acquire new knowledge or skills through ML algorithms.

Some applications that have been explored by robot learning include grasping objects, object categorization and even linguistic interaction with a human peer. Learning can happen through self-exploration or guidance from a human operator.

To learn, intelligent robots must accumulate facts through human input or sensors. Then, the robot's processing unit compares the newly acquired data with previously stored information to predict the best course of action based on the data it has acquired. However, it's important to understand that a robot can only solve problems that it's built to solve. It doesn't have general analytical abilities.
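The step of comparing newly acquired data with stored information can be sketched as a nearest-neighbor lookup. This is one simple way to do it, not how any particular robot works: the `MEMORY` entries, the two sensor features and the action names are all hypothetical.

```python
import math

# Hypothetical stored experience:
# (distance_to_obstacle_m, speed_m_per_s) -> action that worked in the past.
MEMORY = [
    ((0.2, 1.0), "stop"),
    ((1.0, 1.0), "slow_down"),
    ((3.0, 1.0), "continue"),
    ((0.3, 0.1), "steer_around"),
]


def predict_action(reading, memory=MEMORY):
    """Return the action stored with the past reading closest to the new one."""
    _, action = min(memory, key=lambda item: math.dist(item[0], reading))
    return action


print(predict_action((0.25, 0.9)))  # closest stored case is (0.2, 1.0)
print(predict_action((2.5, 1.1)))   # closest stored case is (3.0, 1.0)
```

The sketch also shows the limitation described above: the robot can only choose among actions it has already stored, so it has no answer for a situation unlike anything in its memory.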

Some examples of current applications of machine learning in robotics include the following:

- Computer vision. Robots can perceive, identify and navigate their environments with the help of machine vision, which uses ML algorithms and sensors. Computer vision is used in a wide range of settings, including manufacturing procedures, such as material inspection and pattern and signature recognition.

- Self-supervised learning. Robots can learn from large sets of unlabeled data by generating their own training signals, for example by training a neural network to predict one part of its sensor data from another, rather than relying on human-labeled examples. Self-supervised learning can be used to increase the ability of robots to adapt to changing environments.

- Imitation learning. This entails teaching robots to replicate human behavior by demonstrating the desired actions to them. This can be used to improve the speed and accuracy of automated procedures.

- Assistive robotics. Machine learning can be used to create robotic devices that help people with daily tasks such as mobility and household duties. For example, wheelchair-mounted robot arms can offer greater independence to people with limited mobility in their upper extremities.

- Reinforcement learning. This entails teaching robots how to carry out challenging tasks through the use of trial-and-error techniques to make robotic systems more effective and efficient.
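As an illustration of the trial-and-error idea behind reinforcement learning, the following minimal sketch uses tabular Q-learning, one common reinforcement learning algorithm. The five-position corridor, the reward of 1 at the goal and the values of `ALPHA`, `GAMMA` and `EPSILON` are assumptions chosen for the example, not taken from any real robot.

```python
import random

N_STATES = 5          # positions 0..4 in a hypothetical corridor
GOAL = N_STATES - 1   # reaching the last position ends an episode
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each action
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            # Trial: explore at random sometimes (and whenever values are tied),
            # otherwise exploit the best-known action.
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + ACTIONS[action], 0), GOAL)
            reward = 1.0 if next_state == GOAL else 0.0
            # Error correction: nudge the estimate toward
            # reward + discounted value of the best next action.
            target = reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q


q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:GOAL]]
print(policy)
```

After training, the greedy policy steps right from every position, which is the shortest route to the goal. The robot was never told this; it inferred it from the rewards it collected while trying actions.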

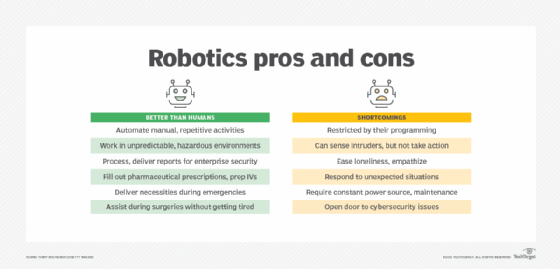

The pros and cons of robotics

Robotic systems are sought after in many industries because they can improve accuracy, reduce costs and increase safety for human beings.

Common advantages of robotics include the following:

- Safety. Safety is arguably one of robotics' greatest benefits, as many dangerous or unhealthy environments no longer require the human element. Examples include the nuclear industry, space, defense and maintenance. With robots or robotic systems, workers can avoid exposure to hazardous chemicals and even limit psychosocial and ergonomic health risks.

- Increased productivity. Robots don't readily become tired or worn out as humans do. They can work continuously without breaks while performing repetitive jobs, which boosts productivity.

- Accuracy. Robots can perform precise tasks with greater consistency and accuracy than humans can. This reduces the risk of errors and inconsistencies.

- Flexibility. Robots can be programmed to carry out a variety of tasks and are easily adaptable to new use cases.

- Cost savings. By automating repetitive tasks, robots can reduce labor costs.

However, despite these benefits, robotics also comes with the following drawbacks:

- Task suitability. Certain tasks are simply better suited for humans -- for example, those jobs that require creativity, adaptability and critical decision-making skills.

- Economic problems. Since robots can perform many repetitive jobs with more precision and speed than humans, there's a risk that they could eventually replace human workers in those roles.

- Cost. Most robotic systems have a high initial cost. It can also cost a lot to repair and maintain robots.

- Increased dependency. Overreliance on robots can erode human skills and problem-solving abilities and increase technological dependence.

- Security risks. There's always a risk of robotic devices getting hacked or hijacked, especially if they're being used for defense and security purposes.

- Power requirements. Robots need a constant power supply and can consume a lot of energy to operate. Regular upkeep and maintenance are also needed to keep them in good working condition.

The future of robotics

Robots are becoming more efficient, flexible and autonomous as AI, machine learning and sensor technologies progress. They're predicted to play an increasingly essential role in a variety of industries and applications in the future. A study by Spherical Insights and Consulting estimated that the size of the global robotics market will increase from $25.82 billion in 2022 to $115.88 billion by 2032.
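To put the market forecast in perspective, the two figures can be converted into an implied compound annual growth rate. The `cagr` helper below is a hypothetical name for the standard formula, and the calculation assumes steady year-over-year growth, which the forecast itself may not.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate linking two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the forecast above, in billions of U.S. dollars.
growth = cagr(25.82, 115.88, years=2032 - 2022)
print(f"Implied annual growth: {growth:.1%}")
```

Under that assumption, the forecast works out to growth of roughly 16% per year over the decade.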

However, there are also concerns about job losses associated with the rapid adoption of robotics across various industries. According to Oxford Economics, up to 20 million manufacturing jobs could be lost to robots by 2030. On the flip side, robots are likely to generate new professional opportunities in fields such as programming and maintenance.
